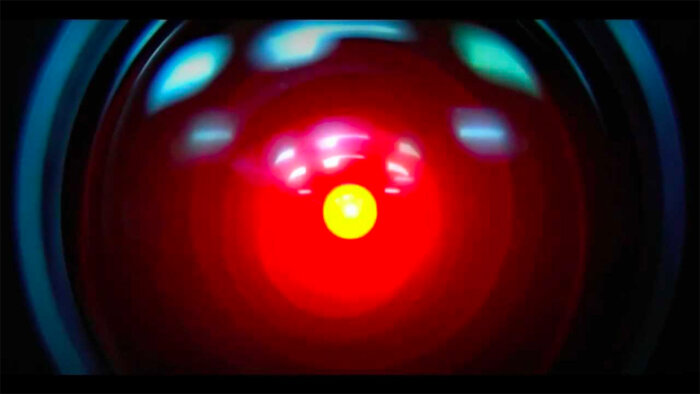

AI knows, or will soon know, practically everything. The most knowledgeable people in the world readily admit that AI knows more than they do – about their own specialties.

How could it be otherwise? AI has access to all the information on the internet. In today’s world, that’s tantamount to saying it has access to all information, period, with the possible exception of personal information like what you ate for breakfast this morning and classified information like the underwater location of America’s nuclear submarines at any given instant.

Apart from those narrow exceptions, AI already knows – or will soon know – more about quantum mechanics than the leading physicists. More about tax law than the best tax lawyers. More about biology than the brightest biologists.

It’s not just me saying this. Business and technology thinkers like Elon Musk and Sam Altman, who know a lot more about AI and a lot more about business than I do, say the same. (Interestingly, most of those deep thinkers are conservative/libertarian in their politics.)

Here are the implications. We are fast approaching the day when people with knowledge will not be able to command a premium for their services. Employers needing knowledge will not pay someone for it; they’ll simply ask AI for it. The knowledge they get from AI will not only be less expensive, but also more accurate.

Granted, we aren’t at that point yet – today’s AI makes too many mistakes – but we soon will be.

This phenomenon is likely to accelerate. AI will acquire more human knowledge and will also start to interpret that knowledge to produce knowledge that humans themselves don’t have.

In some cases, AI will produce knowledge that humans cannot even comprehend. When AI figures out how the universe began, don’t expect to understand its explanation.

That’s the fate of knowledge. Factual knowledge will be the province of machines, not humans.

Now let’s look at another quality that employers currently pay for: hard work.

In the past, hard workers were paid more, just as knowledgeable ones were. That’s because hard workers produced more for the company. Other things being equal, someone who put in 46-hour weeks got paid more than someone who put in 29-hour weeks.

Take an analytical problem that is difficult but solvable. Imagine that a team of humans would need, say, 1,000 man-hours to solve it. The employee working 46-hour weeks will contribute much more to that solution than the one working 29-hour weeks.

In the future, however, the hard work of the AI machine will dwarf both those workers. AI could solve that 1,000-hour problem in seconds, and without the drama, sexual harassment lawsuits, maternity leaves, labor strikes, water-cooler gossip about the boss and expensive office space that come with a team of humans.

To within a tiny rounding error, those two workers, the 46-hour worker and the 29-hour worker, become equally valuable or, more accurately, equally valueless.

Human hard work will thus go the way of human knowledge. Just as backbreaking work in the mines and fields became obsolete with the advent of tunnel-digging machines and farm equipment, other kinds of hard work, including office work, will become obsolete with the advent of AI machines.

This presents a dilemma. If employees are not differentiated by their knowledge or their hard work, then how will they be differentiated in their salaries? How will the market decide to pay Jane a million a year and Charlie only a few hundred thousand? (Yes, workers’ pay will increase dramatically thanks to the enormous efficiencies that AI brings to bear.)

We already see this problem in schools. How do you differentiate students when they’re all using AI to take the test for them and all the answers are right?

Stated another way, if AI can answer questions and perform work assignments unimaginably fast, what can AI not do? What’s left for us humans?

Here’s what. AI cannot weigh human values.

A character in Oscar Wilde’s play *Lady Windermere’s Fan* describes a cynic as a man who “knows the price of everything and the value of nothing.” That character might have been anticipating AI by more than a century.

Oh sure, AI is fully capable of determining value in a cost-per-pound or other quantifiable way. But it is incapable of possessing or assigning “values” in a human sense. It is consequently incapable of weighing those human values in its analysis.

Here’s an example, returning to Elon Musk. He has about a million children at last count (officially ten, according to AI), born to sundry mothers. And he has something like a half-trillion dollars.

AI can figure out how to distribute his billions to his children over time in a way that minimizes the tax consequences. (Don’t worry, the taxes will still be astronomical.)

But here’s a question that AI is incapable of answering. Is it a “good” thing for Musk’s kids to receive a multi-billion-dollar inheritance? More specifically, will such a windfall enhance the values that we humans call “happiness” and “fulfillment”? Relatedly, are such inheritances “good” for society?

My human instinct is that the answer depends on lots of circumstances, especially the nature of each kid. For some kids, such an inheritance would be a “good” thing, for them and perhaps for humanity too, though it might also have some bad aspects. For other kids, maybe not.

To answer this question, you need to understand human nature, you need to understand kids, and you need to understand that people change as they grow up, sometimes in unpredictable ways.

AI will always have a poor grasp of such things. They are, and will remain, the province of humans. They entail something AI will never have, no matter how fast or knowledgeable it becomes. They entail wisdom.

Will society find a way to compensate people for wisdom after AI renders human knowledge and hard work obsolete? I don’t know, and neither does AI. Maybe the compensation for wisdom is just the joy and the pain of having it.